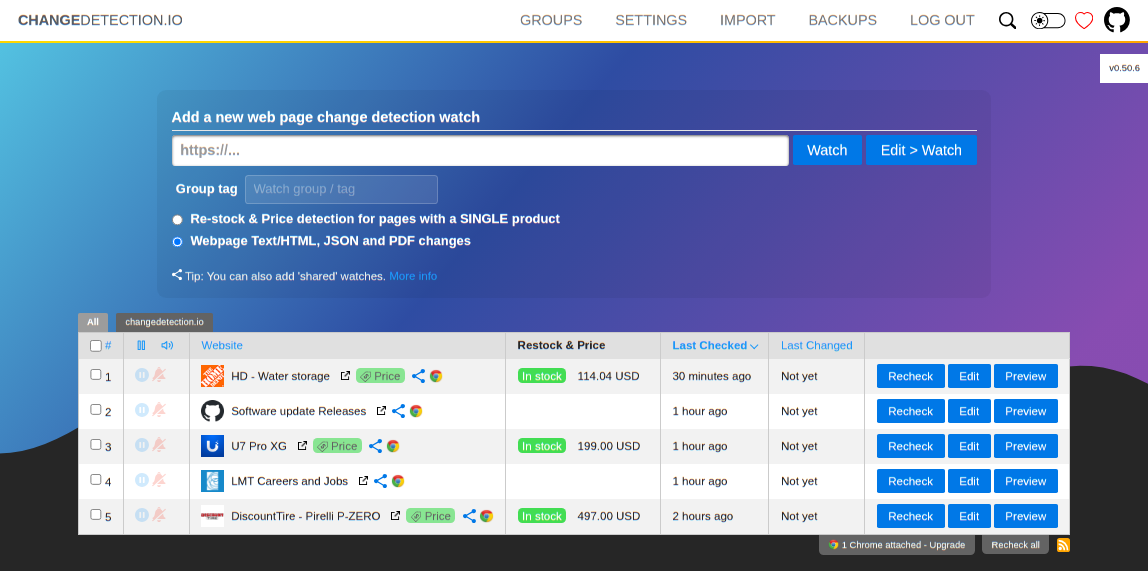

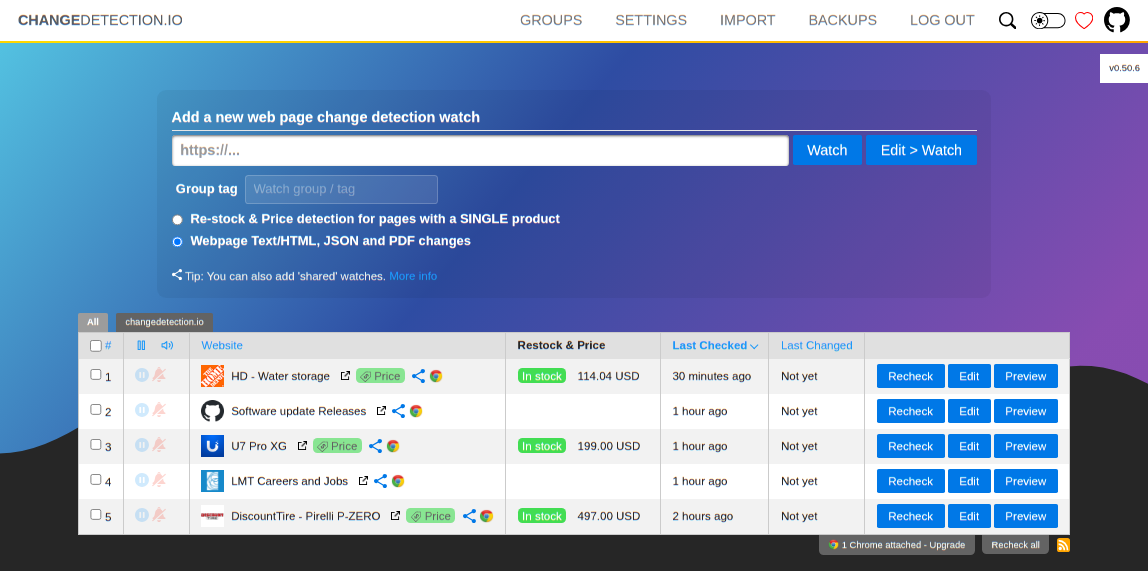

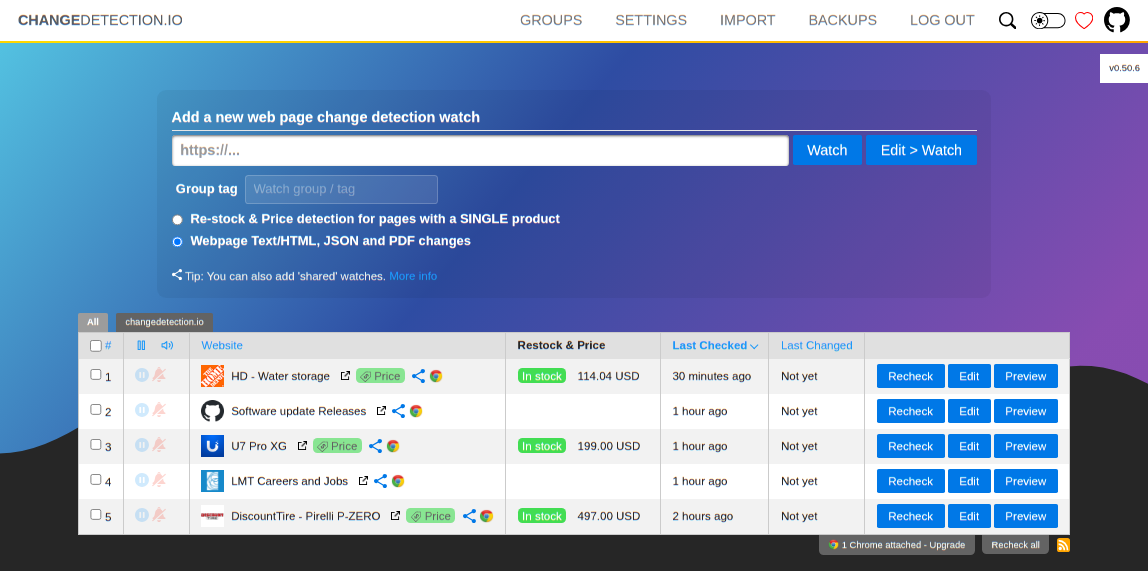

+## Web Site Change Detection, Monitoring and Notification.

-## Self-hosted open source change monitoring of web pages.

+Live your data-life pro-actively, track website content changes and receive notifications via Discord, Email, Slack, Telegram and 70+ more

-_Know when web pages change! Stay ontop of new information!_

+[ ](https://lemonade.changedetection.io/start?src=pip)

-Live your data-life *pro-actively* instead of *re-actively*, do not rely on manipulative social media for consuming important information.

-

-

- -

-

-**Get your own private instance now! Let us host it for you!**

-

-[**Try our $6.99/month subscription - unlimited checks, watches and notifications!**](https://lemonade.changedetection.io/start), choose from different geographical locations, let us handle everything for you.

+[**Don't have time? Let us host it for you! try our extremely affordable subscription use our proxies and support!**](https://lemonade.changedetection.io/start)

#### Example use cases

-Know when ...

-

-- Government department updates (changes are often only on their websites)

-- Local government news (changes are often only on their websites)

+- Products and services have a change in pricing

+- _Out of stock notification_ and _Back In stock notification_

+- Governmental department updates (changes are often only on their websites)

- New software releases, security advisories when you're not on their mailing list.

- Festivals with changes

- Realestate listing changes

+- Know when your favourite whiskey is on sale, or other special deals are announced before anyone else

- COVID related news from government websites

+- University/organisation news from their website

- Detect and monitor changes in JSON API responses

-- API monitoring and alerting

+- JSON API monitoring and alerting

+- Changes in legal and other documents

+- Trigger API calls via notifications when text appears on a website

+- Glue together APIs using the JSON filter and JSON notifications

+- Create RSS feeds based on changes in web content

+- Monitor HTML source code for unexpected changes, strengthen your PCI compliance

+- You have a very sensitive list of URLs to watch and you do _not_ want to use the paid alternatives. (Remember, _you_ are the product)

+

+_Need an actual Chrome runner with Javascript support? We support fetching via WebDriver and Playwright!_

+

+#### Key Features

+

+- Lots of trigger filters, such as "Trigger on text", "Remove text by selector", "Ignore text", "Extract text", also using regular-expressions!

+- Target elements with xPath and CSS Selectors, Easily monitor complex JSON with JSONPath or jq

+- Switch between fast non-JS and Chrome JS based "fetchers"

+- Easily specify how often a site should be checked

+- Execute JS before extracting text (Good for logging in, see examples in the UI!)

+- Override Request Headers, Specify `POST` or `GET` and other methods

+- Use the "Visual Selector" to help target specific elements

-**Get monitoring now!**

```bash

-$ pip3 install changedetection.io

+$ pip3 install changedetection.io

```

Specify a target for the *datastore path* with `-d` (required) and a *listening port* with `-p` (defaults to `5000`)

@@ -51,17 +54,5 @@ $ changedetection.io -d /path/to/empty/data/dir -p 5000

Then visit http://127.0.0.1:5000 , You should now be able to access the UI.

-### Features

-- Website monitoring

-- Change detection of content and analyses

-- Filters on change (Select by CSS or JSON)

-- Triggers (Wait for text, wait for regex)

-- Notification support

-- JSON API Monitoring

-- Parse JSON embedded in HTML

-- (Reverse) Proxy support

-- Javascript support via WebDriver

-- RaspberriPi (arm v6/v7/64 support)

-

See https://github.com/dgtlmoon/changedetection.io for more information.

diff --git a/README.md b/README.md

index 6c33e42e..0f167828 100644

--- a/README.md

+++ b/README.md

@@ -1,34 +1,31 @@

-# changedetection.io

-[![Release Version][release-shield]][release-link] [![Docker Pulls][docker-pulls]][docker-link] [![License][license-shield]](LICENSE.md)

-

-

-

-## Self-Hosted, Open Source, Change Monitoring of Web Pages

-

-_Know when web pages change! Stay ontop of new information!_

+## Web Site Change Detection, Monitoring and Notification.

-Live your data-life *pro-actively* instead of *re-actively*.

+Live your data-life pro-actively, track website content changes and receive notifications via Discord, Email, Slack, Telegram and 70+ more

-Free, Open-source web page monitoring, notification and change detection. Don't have time? [**Try our $6.99/month subscription - unlimited checks and watches!**](https://lemonade.changedetection.io/start)

+[ ](https://lemonade.changedetection.io/start?src=github)

+[![Release Version][release-shield]][release-link] [![Docker Pulls][docker-pulls]][docker-link] [![License][license-shield]](LICENSE.md)

-[ ](https://lemonade.changedetection.io/start)

+

+Know when important content changes, we support notifications via Discord, Telegram, Home-Assistant, Slack, Email and 70+ more

-**Get your own private instance now! Let us host it for you!**

+[**Don't have time? Let us host it for you! try our $6.99/month subscription - use our proxies and support!**](https://lemonade.changedetection.io/start) , _half the price of other website change monitoring services and comes with unlimited watches & checks!_

-[**Try our $6.99/month subscription - unlimited checks and watches!**](https://lemonade.changedetection.io/start) , _half the price of other website change monitoring services and comes with unlimited watches & checks!_

+- Chrome browser included.

+- Super fast, no registration needed setup.

+- Start watching and receiving change notifications instantly.

+Easily see what changed, examine by word, line, or individual character.

-- Automatic Updates, Automatic Backups, No Heroku "paused application", don't miss a change!

-- Javascript browser included

-- Unlimited checks and watches!

+

#### Example use cases

- Products and services have a change in pricing

+- _Out of stock notification_ and _Back In stock notification_

- Governmental department updates (changes are often only on their websites)

- New software releases, security advisories when you're not on their mailing list.

- Festivals with changes

@@ -45,15 +42,22 @@ Free, Open-source web page monitoring, notification and change detection. Don't

- Monitor HTML source code for unexpected changes, strengthen your PCI compliance

- You have a very sensitive list of URLs to watch and you do _not_ want to use the paid alternatives. (Remember, _you_ are the product)

-_Need an actual Chrome runner with Javascript support? We support fetching via WebDriver!_

+_Need an actual Chrome runner with Javascript support? We support fetching via WebDriver and Playwright!_

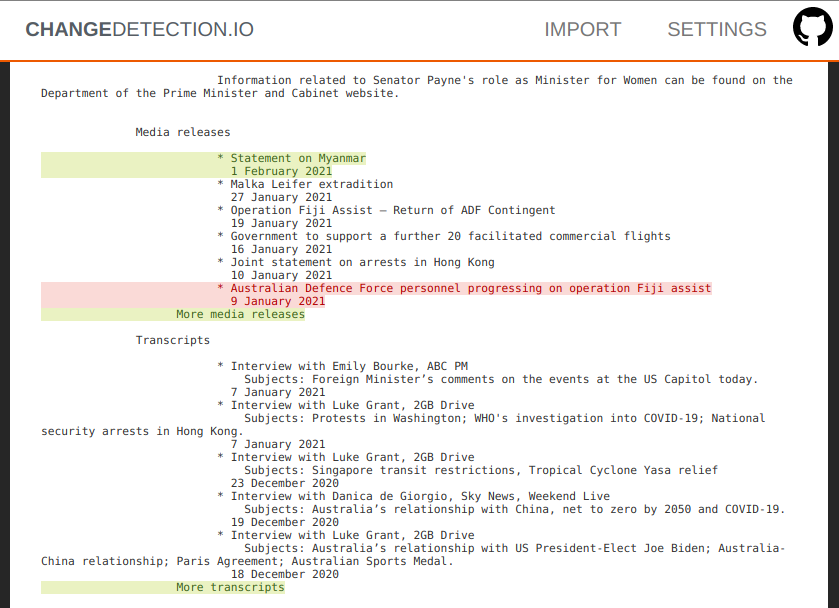

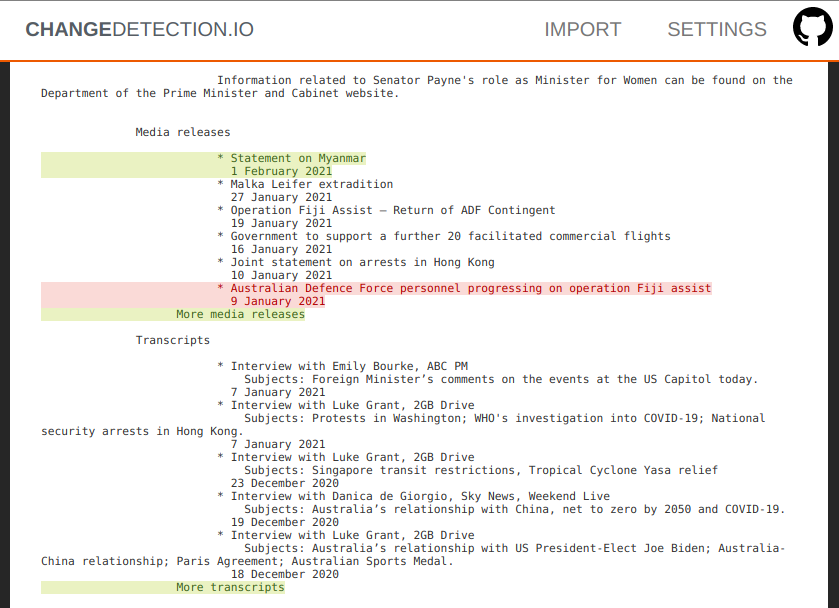

-## Screenshots

+#### Key Features

-### Examine differences in content.

+- Lots of trigger filters, such as "Trigger on text", "Remove text by selector", "Ignore text", "Extract text", also using regular-expressions!

+- Target elements with xPath and CSS Selectors, Easily monitor complex JSON with JSONPath or jq

+- Switch between fast non-JS and Chrome JS based "fetchers"

+- Easily specify how often a site should be checked

+- Execute JS before extracting text (Good for logging in, see examples in the UI!)

+- Override Request Headers, Specify `POST` or `GET` and other methods

+- Use the "Visual Selector" to help target specific elements

+- Configurable [proxy per watch](https://github.com/dgtlmoon/changedetection.io/wiki/Proxy-configuration)

-Easily see what changed, examine by word, line, or individual character.

+We [recommend and use Bright Data](https://brightdata.grsm.io/n0r16zf7eivq) global proxy services, Bright Data will match any first deposit up to $100 using our signup link.

-

+## Screenshots

Please :star: star :star: this project and help it grow! https://github.com/dgtlmoon/changedetection.io/

@@ -117,8 +121,8 @@ See the wiki for more information https://github.com/dgtlmoon/changedetection.io

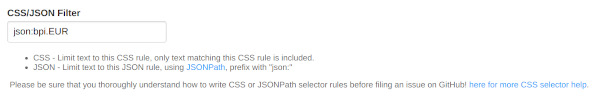

## Filters

-XPath, JSONPath and CSS support comes baked in! You can be as specific as you need, use XPath exported from various XPath element query creation tools.

+XPath, JSONPath, jq, and CSS support comes baked in! You can be as specific as you need, use XPath exported from various XPath element query creation tools.

(We support LXML `re:test`, `re:match` and `re:replace`.)

## Notifications

@@ -147,7 +151,7 @@ Now you can also customise your notification content!

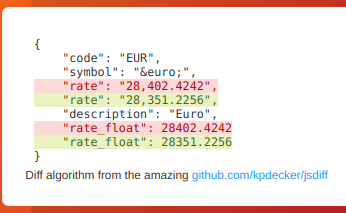

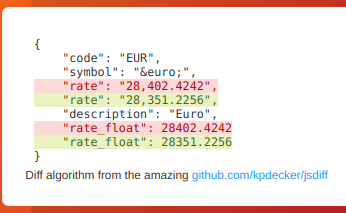

## JSON API Monitoring

-Detect changes and monitor data in JSON API's by using the built-in JSONPath selectors as a filter / selector.

+Detect changes and monitor data in JSON API's by using either JSONPath or jq to filter, parse, and restructure JSON as needed.

@@ -155,9 +159,20 @@ This will re-parse the JSON and apply formatting to the text, making it super ea

+### JSONPath or jq?

+

+For more complex parsing, filtering, and modifying of JSON data, jq is recommended due to the built-in operators and functions. Refer to the [documentation](https://stedolan.github.io/jq/manual/) for more specific information on jq.

+

+One big advantage of `jq` is that you can use logic in your JSON filter, such as filters to only show items that have a value greater than/less than etc.

+

+See the wiki https://github.com/dgtlmoon/changedetection.io/wiki/JSON-Selector-Filter-help for more information and examples

+

+Note: `jq` library must be added separately (`pip3 install jq`)

+

+

### Parse JSON embedded in HTML!

-When you enable a `json:` filter, you can even automatically extract and parse embedded JSON inside a HTML page! Amazingly handy for sites that build content based on JSON, such as many e-commerce websites.

+When you enable a `json:` or `jq:` filter, you can even automatically extract and parse embedded JSON inside a HTML page! Amazingly handy for sites that build content based on JSON, such as many e-commerce websites.

```

@@ -167,7 +182,7 @@ When you enable a `json:` filter, you can even automatically extract and parse e

```

-`json:$.price` would give `23.50`, or you can extract the whole structure

+`json:$.price` or `jq:.price` would give `23.50`, or you can extract the whole structure
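As a rough illustration of the idea behind parsing JSON embedded in HTML (a sketch only, not the project's actual implementation), the snippet below collects `application/ld+json` script blocks with the Python standard library and reads the `price` key, which is the value that `json:$.price` or `jq:.price` would select. The names `EmbeddedJSONParser` and `extract_embedded_json`, and the sample page, are hypothetical.

```python
import json
from html.parser import HTMLParser


class EmbeddedJSONParser(HTMLParser):
    """Collects the raw text of <script type="application/ld+json"> blocks."""

    def __init__(self):
        super().__init__()
        self._in_json_script = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_json_script = True
            self._buffer = []

    def handle_data(self, data):
        # Script bodies arrive here as plain text
        if self._in_json_script:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_json_script:
            self.blocks.append("".join(self._buffer))
            self._in_json_script = False


def extract_embedded_json(html):
    """Return every embedded JSON document found in the page, parsed."""
    parser = EmbeddedJSONParser()
    parser.feed(html)
    parser.close()
    return [json.loads(block) for block in parser.blocks]


# Hypothetical page content, for illustration only
sample_html = """
<html><body>
<script type="application/ld+json">
{"@type": "Product", "name": "Example product", "price": 23.5}
</script>
</body></html>
"""

documents = extract_embedded_json(sample_html)
print(documents[0]["price"])
```

Once the embedded document is parsed, a JSONPath or jq expression can be applied to it exactly as it would be to a plain JSON API response.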

## Proxy configuration

diff --git a/changedetection.py b/changedetection.py

index 9e76cc8c..8455315a 100755

--- a/changedetection.py

+++ b/changedetection.py

@@ -6,6 +6,36 @@

# Read more https://github.com/dgtlmoon/changedetection.io/wiki

from changedetectionio import changedetection

+import multiprocessing

+import signal

+import os

+

+def sigchld_handler(_signo, _stack_frame):
+    import sys
+    print('Shutdown: Got SIGCHLD')
+    # https://stackoverflow.com/questions/40453496/python-multiprocessing-capturing-signals-to-restart-child-processes-or-shut-do
+    pid, status = os.waitpid(-1, os.WNOHANG | os.WUNTRACED | os.WCONTINUED)
+
+    print('Sub-process: pid %d status %d' % (pid, status))
+    if status != 0:
+        sys.exit(1)
+
+    raise SystemExit
if __name__ == '__main__':
-    changedetection.main()
+
+    #signal.signal(signal.SIGCHLD, sigchld_handler)
+
+    # The only way I could find to get Flask to shutdown, is to wrap it and then rely on the subsystem issuing SIGTERM/SIGKILL
+    parse_process = multiprocessing.Process(target=changedetection.main)
+    parse_process.daemon = True
+    parse_process.start()
+    import time
+
+    try:
+        while True:
+            time.sleep(1)
+
+    except KeyboardInterrupt:
+        #parse_process.terminate() not needed, because this process will issue it to the sub-process anyway
+        print ("Exited - CTRL+C")

diff --git a/changedetectionio/.gitignore b/changedetectionio/.gitignore

index 1d463784..0d3c1d4e 100644

--- a/changedetectionio/.gitignore

+++ b/changedetectionio/.gitignore

@@ -1 +1,2 @@

test-datastore

+package-lock.json

diff --git a/changedetectionio/__init__.py b/changedetectionio/__init__.py

index 2b10ace3..c6f95f1e 100644

--- a/changedetectionio/__init__.py

+++ b/changedetectionio/__init__.py

@@ -1,16 +1,5 @@

#!/usr/bin/python3

-

-# @todo logging

-# @todo extra options for url like , verify=False etc.

-# @todo enable https://urllib3.readthedocs.io/en/latest/user-guide.html#ssl as option?

-# @todo option for interval day/6 hour/etc

-# @todo on change detected, config for calling some API

-# @todo fetch title into json

-# https://distill.io/features

-# proxy per check

-# - flask_cors, itsdangerous,MarkupSafe

-

import datetime

import os

import queue

@@ -44,7 +33,7 @@ from flask_wtf import CSRFProtect

from changedetectionio import html_tools

from changedetectionio.api import api_v1

-__version__ = '0.39.16'

+__version__ = '0.39.20.4'

datastore = None

@@ -54,7 +43,7 @@ ticker_thread = None

extra_stylesheets = []

-update_q = queue.Queue()

+update_q = queue.PriorityQueue()

notification_q = queue.Queue()
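Switching `update_q` to `queue.PriorityQueue` means entries become `(priority, uuid)` tuples rather than bare UUIDs, which is why later hunks call `update_q.put((1, uuid))` and unpack pairs when listing queued watches. The following is a minimal sketch of that behaviour; the UUID strings and the priority value `5` are hypothetical and not taken from the codebase.

```python
import queue

update_q = queue.PriorityQueue()

# Entries are (priority, uuid) tuples; lower numbers are served first.
update_q.put((5, "uuid-scheduled"))    # hypothetical routine recheck
update_q.put((1, "uuid-just-edited"))  # hypothetical user-triggered recheck

# Peek at the queued UUIDs without consuming them (heap order, not sorted).
queued_uuids = [uuid for _priority, uuid in update_q.queue]

# get() returns the entry with the smallest priority number.
priority, uuid = update_q.get()
print(priority, uuid)
```

Note that when two entries share a priority, the tuples are compared on their second element, so the UUIDs must be mutually comparable (plain strings are).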

@@ -76,7 +65,7 @@ app.config['LOGIN_DISABLED'] = False

# Disables caching of the templates

app.config['TEMPLATES_AUTO_RELOAD'] = True

-

+app.jinja_env.add_extension('jinja2.ext.loopcontrols')

csrf = CSRFProtect()

csrf.init_app(app)

@@ -115,18 +104,19 @@ def _jinja2_filter_datetime(watch_obj, format="%Y-%m-%d %H:%M:%S"):

return timeago.format(int(watch_obj['last_checked']), time.time())

-

-# @app.context_processor

-# def timeago():

-# def _timeago(lower_time, now):

-# return timeago.format(lower_time, now)

-# return dict(timeago=_timeago)

-

@app.template_filter('format_timestamp_timeago')

def _jinja2_filter_datetimestamp(timestamp, format="%Y-%m-%d %H:%M:%S"):

+ if timestamp == False:

+ return 'Not yet'

+

return timeago.format(timestamp, time.time())

- # return timeago.format(timestamp, time.time())

- # return datetime.datetime.utcfromtimestamp(timestamp).strftime(format)

+

+@app.template_filter('format_seconds_ago')

+def _jinja2_filter_seconds_precise(timestamp):

+ if timestamp == False:

+ return 'Not yet'

+

+ return format(int(time.time()-timestamp), ',d')

# When nobody is logged in Flask-Login's current_user is set to an AnonymousUser object.

class User(flask_login.UserMixin):

@@ -313,7 +303,7 @@ def changedetection_app(config=None, datastore_o=None):

watch['uuid'] = uuid

sorted_watches.append(watch)

- sorted_watches.sort(key=lambda x: x['last_changed'], reverse=True)

+ sorted_watches.sort(key=lambda x: x.last_changed, reverse=False)

fg = FeedGenerator()

fg.title('changedetection.io')

@@ -332,7 +322,7 @@ def changedetection_app(config=None, datastore_o=None):

if not watch.viewed:

# Re #239 - GUID needs to be individual for each event

# @todo In the future make this a configurable link back (see work on BASE_URL https://github.com/dgtlmoon/changedetection.io/pull/228)

- guid = "{}/{}".format(watch['uuid'], watch['last_changed'])

+ guid = "{}/{}".format(watch['uuid'], watch.last_changed)

fe = fg.add_entry()

# Include a link to the diff page, they will have to login here to see if password protection is enabled.

@@ -370,20 +360,20 @@ def changedetection_app(config=None, datastore_o=None):

from changedetectionio import forms

limit_tag = request.args.get('tag')

- pause_uuid = request.args.get('pause')

-

# Redirect for the old rss path which used the /?rss=true

if request.args.get('rss'):

return redirect(url_for('rss', tag=limit_tag))

- if pause_uuid:

- try:

- datastore.data['watching'][pause_uuid]['paused'] ^= True

- datastore.needs_write = True

+ op = request.args.get('op')

+ if op:

+ uuid = request.args.get('uuid')

+ if op == 'pause':

+ datastore.data['watching'][uuid]['paused'] ^= True

+ elif op == 'mute':

+ datastore.data['watching'][uuid]['notification_muted'] ^= True

- return redirect(url_for('index', tag = limit_tag))

- except KeyError:

- pass

+ datastore.needs_write = True

+ return redirect(url_for('index', tag = limit_tag))

# Sort by last_changed and add the uuid which is usually the key..

sorted_watches = []

@@ -406,7 +396,6 @@ def changedetection_app(config=None, datastore_o=None):

existing_tags = datastore.get_all_tags()

form = forms.quickWatchForm(request.form)

-

output = render_template("watch-overview.html",

form=form,

watches=sorted_watches,

@@ -417,7 +406,7 @@ def changedetection_app(config=None, datastore_o=None):

# Don't link to hosting when we're on the hosting environment

hosted_sticky=os.getenv("SALTED_PASS", False) == False,

guid=datastore.data['app_guid'],

- queued_uuids=update_q.queue)

+ queued_uuids=[uuid for p,uuid in update_q.queue])

if session.get('share-link'):

@@ -503,7 +492,7 @@ def changedetection_app(config=None, datastore_o=None):

from changedetectionio import fetch_site_status

# Get the most recent one

- newest_history_key = datastore.get_val(uuid, 'newest_history_key')

+ newest_history_key = datastore.data['watching'][uuid].get('newest_history_key')

# 0 means that theres only one, so that there should be no 'unviewed' history available

if newest_history_key == 0:

@@ -552,16 +541,13 @@ def changedetection_app(config=None, datastore_o=None):

# be sure we update with a copy instead of accidently editing the live object by reference

default = deepcopy(datastore.data['watching'][uuid])

- # Show system wide default if nothing configured

- if datastore.data['watching'][uuid]['fetch_backend'] is None:

- default['fetch_backend'] = datastore.data['settings']['application']['fetch_backend']

-

# Show system wide default if nothing configured

if all(value == 0 or value == None for value in datastore.data['watching'][uuid]['time_between_check'].values()):

default['time_between_check'] = deepcopy(datastore.data['settings']['requests']['time_between_check'])

# Defaults for proxy choice

if datastore.proxy_list is not None: # When enabled

+ # @todo

# Radio needs '' not None, or incase that the chosen one no longer exists

if default['proxy'] is None or not any(default['proxy'] in tup for tup in datastore.proxy_list):

default['proxy'] = ''

@@ -575,11 +561,17 @@ def changedetection_app(config=None, datastore_o=None):

# @todo - Couldn't get setattr() etc dynamic addition working, so remove it instead

del form.proxy

else:

- form.proxy.choices = [('', 'Default')] + datastore.proxy_list

+ form.proxy.choices = [('', 'Default')]

+ for p in datastore.proxy_list:

+ form.proxy.choices.append(tuple((p, datastore.proxy_list[p]['label'])))

+

if request.method == 'POST' and form.validate():

extra_update_obj = {}

+ if request.args.get('unpause_on_save'):

+ extra_update_obj['paused'] = False

+

# Re #110, if they submit the same as the default value, set it to None, so we continue to follow the default

# Assume we use the default value, unless something relevant is different, then use the form value

# values could be None, 0 etc.
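The hunk above implies that `datastore.proxy_list` is now a mapping keyed by proxy identifier, with each value carrying at least a `label`. A small sketch of building the form choices under that assumption follows; the proxy keys, the labels, and the `url` field are hypothetical.

```python
# Hypothetical proxy configuration: key -> settings containing a 'label'
proxy_list = {
    "proxy-one": {"label": "Proxy One", "url": "http://127.0.0.1:3128"},
    "proxy-two": {"label": "Proxy Two", "url": "http://127.0.0.1:3129"},
}

# The first choice keeps the system-wide default; the rest are (key, label) pairs.
choices = [("", "Default")]
for key in proxy_list:
    choices.append((key, proxy_list[key]["label"]))

print(choices)
```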

@@ -595,10 +587,8 @@ def changedetection_app(config=None, datastore_o=None):

if form.fetch_backend.data == datastore.data['settings']['application']['fetch_backend']:

extra_update_obj['fetch_backend'] = None

- # Notification URLs

- datastore.data['watching'][uuid]['notification_urls'] = form.notification_urls.data

- # Ignore text

+ # Ignore text

form_ignore_text = form.ignore_text.data

datastore.data['watching'][uuid]['ignore_text'] = form_ignore_text

@@ -619,24 +609,23 @@ def changedetection_app(config=None, datastore_o=None):

datastore.data['watching'][uuid].update(form.data)

datastore.data['watching'][uuid].update(extra_update_obj)

- flash("Updated watch.")

+ if request.args.get('unpause_on_save'):

+ flash("Updated watch - unpaused!.")

+ else:

+ flash("Updated watch.")

# Re #286 - We wait for syncing new data to disk in another thread every 60 seconds

# But in the case something is added we should save straight away

datastore.needs_write_urgent = True

- # Queue the watch for immediate recheck

- update_q.put(uuid)

+ # Queue the watch for immediate recheck, with a higher priority

+ update_q.put((1, uuid))

# Diff page [edit] link should go back to diff page

- if request.args.get("next") and request.args.get("next") == 'diff' and not form.save_and_preview_button.data:

+ if request.args.get("next") and request.args.get("next") == 'diff':

return redirect(url_for('diff_history_page', uuid=uuid))

- else:

- if form.save_and_preview_button.data:

- flash('You may need to reload this page to see the new content.')

- return redirect(url_for('preview_page', uuid=uuid))

- else:

- return redirect(url_for('index'))

+

+ return redirect(url_for('index'))

else:

if request.method == 'POST' and not form.validate():

@@ -647,18 +636,27 @@ def changedetection_app(config=None, datastore_o=None):

# Only works reliably with Playwright

visualselector_enabled = os.getenv('PLAYWRIGHT_DRIVER_URL', False) and default['fetch_backend'] == 'html_webdriver'

+ # JQ is difficult to install on windows and must be manually added (outside requirements.txt)

+ jq_support = True

+ try:

+ import jq

+ except ModuleNotFoundError:

+ jq_support = False

output = render_template("edit.html",

- uuid=uuid,

- watch=datastore.data['watching'][uuid],

+ current_base_url=datastore.data['settings']['application']['base_url'],

+ emailprefix=os.getenv('NOTIFICATION_MAIL_BUTTON_PREFIX', False),

form=form,

+ has_default_notification_urls=True if len(datastore.data['settings']['application']['notification_urls']) else False,

has_empty_checktime=using_default_check_time,

+ jq_support=jq_support,

+ playwright_enabled=os.getenv('PLAYWRIGHT_DRIVER_URL', False),

+ settings_application=datastore.data['settings']['application'],

using_global_webdriver_wait=default['webdriver_delay'] is None,

- current_base_url=datastore.data['settings']['application']['base_url'],

- emailprefix=os.getenv('NOTIFICATION_MAIL_BUTTON_PREFIX', False),

+ uuid=uuid,

visualselector_data_is_ready=visualselector_data_is_ready,

visualselector_enabled=visualselector_enabled,

- playwright_enabled=os.getenv('PLAYWRIGHT_DRIVER_URL', False)

+ watch=datastore.data['watching'][uuid],

)

return output
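The `jq_support` flag above is an instance of optional-dependency detection: try the import and fall back gracefully if the module is missing. One way to generalise that pattern is sketched below; the helper name `module_available` is hypothetical and does not appear in the codebase.

```python
import importlib


def module_available(name):
    """Return True if the named module can be imported, else False."""
    try:
        importlib.import_module(name)
    except ModuleNotFoundError:
        return False
    return True


# Mirrors the jq_support flag that is passed to the edit template
jq_support = module_available("jq")
print("jq filters enabled:", jq_support)
```

Catching `ModuleNotFoundError` rather than a bare `Exception` keeps genuine import-time bugs in an installed module visible instead of silently disabling the feature.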

@@ -670,26 +668,34 @@ def changedetection_app(config=None, datastore_o=None):

default = deepcopy(datastore.data['settings'])

if datastore.proxy_list is not None:

+ available_proxies = list(datastore.proxy_list.keys())

# When enabled

system_proxy = datastore.data['settings']['requests']['proxy']

# In the case it doesnt exist anymore

- if not any([system_proxy in tup for tup in datastore.proxy_list]):

+ if not system_proxy in available_proxies:

system_proxy = None

- default['requests']['proxy'] = system_proxy if system_proxy is not None else datastore.proxy_list[0][0]

+ default['requests']['proxy'] = system_proxy if system_proxy is not None else available_proxies[0]

# Used by the form handler to keep or remove the proxy settings

- default['proxy_list'] = datastore.proxy_list

+ default['proxy_list'] = available_proxies[0]

# Don't use form.data on POST so that it doesnt overrid the checkbox status from the POST status

form = forms.globalSettingsForm(formdata=request.form if request.method == 'POST' else None,

data=default

)

+

+ # Remove the last option 'System default'

+ form.application.form.notification_format.choices.pop()

+

if datastore.proxy_list is None:

# @todo - Couldn't get setattr() etc dynamic addition working, so remove it instead

del form.requests.form.proxy

else:

- form.requests.form.proxy.choices = datastore.proxy_list

+ form.requests.form.proxy.choices = []

+ for p in datastore.proxy_list:

+ form.requests.form.proxy.choices.append(tuple((p, datastore.proxy_list[p]['label'])))

+

if request.method == 'POST':

# Password unset is a GET, but we can lock the session to a salted env password to always need the password

@@ -702,7 +708,14 @@ def changedetection_app(config=None, datastore_o=None):

return redirect(url_for('settings_page'))

if form.validate():

- datastore.data['settings']['application'].update(form.data['application'])

+ # Don't set password to False when a password is set - should be only removed with the `removepassword` button

+ app_update = dict(deepcopy(form.data['application']))

+

+ # Never update password with '' or False (Added by wtforms when not in submission)

+ if 'password' in app_update and not app_update['password']:

+ del app_update['password']

+

+ datastore.data['settings']['application'].update(app_update)

datastore.data['settings']['requests'].update(form.data['requests'])

if not os.getenv("SALTED_PASS", False) and len(form.application.form.password.encrypted_password):

@@ -723,7 +736,8 @@ def changedetection_app(config=None, datastore_o=None):

current_base_url = datastore.data['settings']['application']['base_url'],

hide_remove_pass=os.getenv("SALTED_PASS", False),

api_key=datastore.data['settings']['application'].get('api_access_token'),

- emailprefix=os.getenv('NOTIFICATION_MAIL_BUTTON_PREFIX', False))

+ emailprefix=os.getenv('NOTIFICATION_MAIL_BUTTON_PREFIX', False),

+ settings_application=datastore.data['settings']['application'])

return output

@@ -740,7 +754,7 @@ def changedetection_app(config=None, datastore_o=None):

importer = import_url_list()

importer.run(data=request.values.get('urls'), flash=flash, datastore=datastore)

for uuid in importer.new_uuids:

- update_q.put(uuid)

+ update_q.put((1, uuid))

if len(importer.remaining_data) == 0:

return redirect(url_for('index'))

@@ -753,7 +767,7 @@ def changedetection_app(config=None, datastore_o=None):

d_importer = import_distill_io_json()

d_importer.run(data=request.values.get('distill-io'), flash=flash, datastore=datastore)

for uuid in d_importer.new_uuids:

- update_q.put(uuid)

+ update_q.put((1, uuid))

@@ -802,8 +816,10 @@ def changedetection_app(config=None, datastore_o=None):

newest_file = history[dates[-1]]

+ # Read as text, forcing UTF-8 decoding and ignoring undecodable bytes

+ # Windows may fail decode in python if we just use 'r' mode (chardet decode exception)

try:

- with open(newest_file, 'r') as f:

+ with open(newest_file, 'r', encoding='utf-8', errors='ignore') as f:

newest_version_file_contents = f.read()

except Exception as e:

newest_version_file_contents = "Unable to read {}.\n".format(newest_file)

@@ -816,13 +832,13 @@ def changedetection_app(config=None, datastore_o=None):

previous_file = history[dates[-2]]

try:

- with open(previous_file, 'r') as f:

+ with open(previous_file, 'r', encoding='utf-8', errors='ignore') as f:

previous_version_file_contents = f.read()

except Exception as e:

previous_version_file_contents = "Unable to read {}.\n".format(previous_file)

- screenshot_url = datastore.get_screenshot(uuid)

+ screenshot_url = watch.get_screenshot()

system_uses_webdriver = datastore.data['settings']['application']['fetch_backend'] == 'html_webdriver'

@@ -842,7 +858,11 @@ def changedetection_app(config=None, datastore_o=None):

extra_title=" - Diff - {}".format(watch['title'] if watch['title'] else watch['url']),

left_sticky=True,

screenshot=screenshot_url,

- is_html_webdriver=is_html_webdriver)

+ is_html_webdriver=is_html_webdriver,

+ last_error=watch['last_error'],

+ last_error_text=watch.get_error_text(),

+ last_error_screenshot=watch.get_error_snapshot()

+ )

return output

@@ -857,73 +877,82 @@ def changedetection_app(config=None, datastore_o=None):

if uuid == 'first':

uuid = list(datastore.data['watching'].keys()).pop()

- # Normally you would never reach this, because the 'preview' button is not available when there's no history

- # However they may try to clear snapshots and reload the page

- if datastore.data['watching'][uuid].history_n == 0:

- flash("Preview unavailable - No fetch/check completed or triggers not reached", "error")

- return redirect(url_for('index'))

-

- extra_stylesheets = [url_for('static_content', group='styles', filename='diff.css')]

-

try:

watch = datastore.data['watching'][uuid]

except KeyError:

flash("No history found for the specified link, bad link?", "error")

return redirect(url_for('index'))

- if watch.history_n >0:

- timestamps = sorted(watch.history.keys(), key=lambda x: int(x))

- filename = watch.history[timestamps[-1]]

- try:

- with open(filename, 'r') as f:

- tmp = f.readlines()

-

- # Get what needs to be highlighted

- ignore_rules = watch.get('ignore_text', []) + datastore.data['settings']['application']['global_ignore_text']

-

- # .readlines will keep the \n, but we will parse it here again, in the future tidy this up

- ignored_line_numbers = html_tools.strip_ignore_text(content="".join(tmp),

- wordlist=ignore_rules,

- mode='line numbers'

- )

-

- trigger_line_numbers = html_tools.strip_ignore_text(content="".join(tmp),

- wordlist=watch['trigger_text'],

- mode='line numbers'

- )

- # Prepare the classes and lines used in the template

- i=0

- for l in tmp:

- classes=[]

- i+=1

- if i in ignored_line_numbers:

- classes.append('ignored')

- if i in trigger_line_numbers:

- classes.append('triggered')

- content.append({'line': l, 'classes': ' '.join(classes)})

-

-

- except Exception as e:

- content.append({'line': "File doesnt exist or unable to read file {}".format(filename), 'classes': ''})

- else:

- content.append({'line': "No history found", 'classes': ''})

-

- screenshot_url = datastore.get_screenshot(uuid)

system_uses_webdriver = datastore.data['settings']['application']['fetch_backend'] == 'html_webdriver'

+ extra_stylesheets = [url_for('static_content', group='styles', filename='diff.css')]

+

is_html_webdriver = True if watch.get('fetch_backend') == 'html_webdriver' or (

watch.get('fetch_backend', None) is None and system_uses_webdriver) else False

+ # Never requested successfully, but we detected a fetch error

+ if datastore.data['watching'][uuid].history_n == 0 and (watch.get_error_text() or watch.get_error_snapshot()):

+ flash("Preview unavailable - No fetch/check completed or triggers not reached", "error")

+ output = render_template("preview.html",

+ content=content,

+ history_n=watch.history_n,

+ extra_stylesheets=extra_stylesheets,

+# current_diff_url=watch['url'],

+ watch=watch,

+ uuid=uuid,

+ is_html_webdriver=is_html_webdriver,

+ last_error=watch['last_error'],

+ last_error_text=watch.get_error_text(),

+ last_error_screenshot=watch.get_error_snapshot())

+ return output

+

+ timestamp = list(watch.history.keys())[-1]

+ filename = watch.history[timestamp]

+ try:

+ with open(filename, 'r', encoding='utf-8', errors='ignore') as f:

+ tmp = f.readlines()

+

+ # Get what needs to be highlighted

+ ignore_rules = watch.get('ignore_text', []) + datastore.data['settings']['application']['global_ignore_text']

+

+ # .readlines will keep the \n, but we will parse it here again, in the future tidy this up

+ ignored_line_numbers = html_tools.strip_ignore_text(content="".join(tmp),

+ wordlist=ignore_rules,

+ mode='line numbers'

+ )

+

+ trigger_line_numbers = html_tools.strip_ignore_text(content="".join(tmp),

+ wordlist=watch['trigger_text'],

+ mode='line numbers'

+ )

+ # Prepare the classes and lines used in the template

+ i=0

+ for l in tmp:

+ classes=[]

+ i+=1

+ if i in ignored_line_numbers:

+ classes.append('ignored')

+ if i in trigger_line_numbers:

+ classes.append('triggered')

+ content.append({'line': l, 'classes': ' '.join(classes)})

+

+ except Exception as e:

+ content.append({'line': "File doesnt exist or unable to read file {}".format(filename), 'classes': ''})

+

output = render_template("preview.html",

content=content,

+ history_n=watch.history_n,

extra_stylesheets=extra_stylesheets,

ignored_line_numbers=ignored_line_numbers,

triggered_line_numbers=trigger_line_numbers,

current_diff_url=watch['url'],

- screenshot=screenshot_url,

+ screenshot=watch.get_screenshot(),

watch=watch,

uuid=uuid,

- is_html_webdriver=is_html_webdriver)

+ is_html_webdriver=is_html_webdriver,

+ last_error=watch['last_error'],

+ last_error_text=watch.get_error_text(),

+ last_error_screenshot=watch.get_error_snapshot())

return output

@@ -1023,11 +1052,12 @@ def changedetection_app(config=None, datastore_o=None):

if datastore.data['settings']['application']['password'] and not flask_login.current_user.is_authenticated:

abort(403)

+ screenshot_filename = "last-screenshot.png" if not request.args.get('error_screenshot') else "last-error-screenshot.png"

+

# These files should be in our subdirectory

try:

# set nocache, set content-type

- watch_dir = datastore_o.datastore_path + "/" + filename

- response = make_response(send_from_directory(filename="last-screenshot.png", directory=watch_dir, path=watch_dir + "/last-screenshot.png"))

+ response = make_response(send_from_directory(os.path.join(datastore_o.datastore_path, filename), screenshot_filename))

response.headers['Content-type'] = 'image/png'

response.headers['Cache-Control'] = 'no-cache, no-store, must-revalidate'

response.headers['Pragma'] = 'no-cache'

@@ -1063,9 +1093,9 @@ def changedetection_app(config=None, datastore_o=None):

except FileNotFoundError:

abort(404)

- @app.route("/api/add", methods=['POST'])

+ @app.route("/form/add/quickwatch", methods=['POST'])

@login_required

- def form_watch_add():

+ def form_quick_watch_add():

from changedetectionio import forms

form = forms.quickWatchForm(request.form)

@@ -1078,13 +1108,19 @@ def changedetection_app(config=None, datastore_o=None):

flash('The URL {} already exists'.format(url), "error")

return redirect(url_for('index'))

- # @todo add_watch should throw a custom Exception for validation etc

- new_uuid = datastore.add_watch(url=url, tag=request.form.get('tag').strip())

- if new_uuid:

+ add_paused = request.form.get('edit_and_watch_submit_button') is not None

+ new_uuid = datastore.add_watch(url=url, tag=request.form.get('tag').strip(), extras={'paused': add_paused})

+

+

+ if not add_paused and new_uuid:

# Straight into the queue.

- update_q.put(new_uuid)

+ update_q.put((1, new_uuid))

flash("Watch added.")

+ if add_paused:

+ flash('Watch added in Paused state, saving will unpause.')

+ return redirect(url_for('edit_page', uuid=new_uuid, unpause_on_save=1))

+

return redirect(url_for('index'))

@@ -1115,7 +1151,7 @@ def changedetection_app(config=None, datastore_o=None):

uuid = list(datastore.data['watching'].keys()).pop()

new_uuid = datastore.clone(uuid)

- update_q.put(new_uuid)

+ update_q.put((5, new_uuid))

flash('Cloned.')

return redirect(url_for('index'))

@@ -1136,7 +1172,7 @@ def changedetection_app(config=None, datastore_o=None):

if uuid:

if uuid not in running_uuids:

- update_q.put(uuid)

+ update_q.put((1, uuid))

i = 1

elif tag != None:

@@ -1144,7 +1180,7 @@ def changedetection_app(config=None, datastore_o=None):

for watch_uuid, watch in datastore.data['watching'].items():

if (tag != None and tag in watch['tag']):

if watch_uuid not in running_uuids and not datastore.data['watching'][watch_uuid]['paused']:

- update_q.put(watch_uuid)

+ update_q.put((1, watch_uuid))

i += 1

else:

@@ -1152,11 +1188,68 @@ def changedetection_app(config=None, datastore_o=None):

for watch_uuid, watch in datastore.data['watching'].items():

if watch_uuid not in running_uuids and not datastore.data['watching'][watch_uuid]['paused']:

- update_q.put(watch_uuid)

+ update_q.put((1, watch_uuid))

i += 1

flash("{} watches are queued for rechecking.".format(i))

return redirect(url_for('index', tag=tag))

+ @app.route("/form/checkbox-operations", methods=['POST'])

+ @login_required

+ def form_watch_list_checkbox_operations():

+ op = request.form['op']

+ uuids = request.form.getlist('uuids')

+

+ if (op == 'delete'):

+ for uuid in uuids:

+ uuid = uuid.strip()

+ if datastore.data['watching'].get(uuid):

+ datastore.delete(uuid.strip())

+ flash("{} watches deleted".format(len(uuids)))

+

+ elif (op == 'pause'):

+ for uuid in uuids:

+ uuid = uuid.strip()

+ if datastore.data['watching'].get(uuid):

+ datastore.data['watching'][uuid.strip()]['paused'] = True

+

+ flash("{} watches paused".format(len(uuids)))

+

+ elif (op == 'unpause'):

+ for uuid in uuids:

+ uuid = uuid.strip()

+ if datastore.data['watching'].get(uuid):

+ datastore.data['watching'][uuid.strip()]['paused'] = False

+ flash("{} watches unpaused".format(len(uuids)))

+

+ elif (op == 'mute'):

+ for uuid in uuids:

+ uuid = uuid.strip()

+ if datastore.data['watching'].get(uuid):

+ datastore.data['watching'][uuid.strip()]['notification_muted'] = True

+ flash("{} watches muted".format(len(uuids)))

+

+ elif (op == 'unmute'):

+ for uuid in uuids:

+ uuid = uuid.strip()

+ if datastore.data['watching'].get(uuid):

+ datastore.data['watching'][uuid.strip()]['notification_muted'] = False

+ flash("{} watches un-muted".format(len(uuids)))

+

+ elif (op == 'notification-default'):

+ from changedetectionio.notification import (

+ default_notification_format_for_watch

+ )

+ for uuid in uuids:

+ uuid = uuid.strip()

+ if datastore.data['watching'].get(uuid):

+ datastore.data['watching'][uuid.strip()]['notification_title'] = None

+ datastore.data['watching'][uuid.strip()]['notification_body'] = None

+ datastore.data['watching'][uuid.strip()]['notification_urls'] = []

+ datastore.data['watching'][uuid.strip()]['notification_format'] = default_notification_format_for_watch

+ flash("{} watches set to use default notification settings".format(len(uuids)))

+

+ return redirect(url_for('index'))

+

@app.route("/api/share-url", methods=['GET'])

@login_required

def form_share_put_watch():

@@ -1254,7 +1347,6 @@ def notification_runner():

global notification_debug_log

from datetime import datetime

import json

-

while not app.config.exit.is_set():

try:

# At the moment only one thread runs (single runner)

@@ -1293,6 +1385,8 @@ def ticker_thread_check_time_launch_checks():

import random

from changedetectionio import update_worker

+ proxy_last_called_time = {}

+

recheck_time_minimum_seconds = int(os.getenv('MINIMUM_SECONDS_RECHECK_TIME', 20))

print("System env MINIMUM_SECONDS_RECHECK_TIME", recheck_time_minimum_seconds)

@@ -1353,17 +1447,42 @@ def ticker_thread_check_time_launch_checks():

if watch.jitter_seconds == 0:

watch.jitter_seconds = random.uniform(-abs(jitter), jitter)

-

seconds_since_last_recheck = now - watch['last_checked']

+

if seconds_since_last_recheck >= (threshold + watch.jitter_seconds) and seconds_since_last_recheck >= recheck_time_minimum_seconds:

- if not uuid in running_uuids and uuid not in update_q.queue:

- print("Queued watch UUID {} last checked at {} queued at {:0.2f} jitter {:0.2f}s, {:0.2f}s since last checked".format(uuid,

- watch['last_checked'],

- now,

- watch.jitter_seconds,

- now - watch['last_checked']))

+ if not uuid in running_uuids and uuid not in [q_uuid for p,q_uuid in update_q.queue]:

+

+ # Proxies can be set to have a limit on seconds between which they can be called

+ watch_proxy = datastore.get_preferred_proxy_for_watch(uuid=uuid)

+ if watch_proxy and watch_proxy in list(datastore.proxy_list.keys()):

+ # Proxy may also have some threshold minimum

+ proxy_list_reuse_time_minimum = int(datastore.proxy_list.get(watch_proxy, {}).get('reuse_time_minimum', 0))

+ if proxy_list_reuse_time_minimum:

+ proxy_last_used_time = proxy_last_called_time.get(watch_proxy, 0)

+ time_since_proxy_used = int(time.time() - proxy_last_used_time)

+ if time_since_proxy_used < proxy_list_reuse_time_minimum:

+ # Not enough time difference reached, skip this watch

+ print("> Skipped UUID {} using proxy '{}', not enough time between proxy requests {}s/{}s".format(uuid,

+ watch_proxy,

+ time_since_proxy_used,

+ proxy_list_reuse_time_minimum))

+ continue

+ else:

+ # Record the last used time

+ proxy_last_called_time[watch_proxy] = int(time.time())

+

+ # Use Epoch time as priority, so we get a "sorted" PriorityQueue, but we can still push a priority 1 into it.

+ priority = int(time.time())

+ print(

+ "> Queued watch UUID {} last checked at {} queued at {:0.2f} priority {} jitter {:0.2f}s, {:0.2f}s since last checked".format(

+ uuid,

+ watch['last_checked'],

+ now,

+ priority,

+ watch.jitter_seconds,

+ now - watch['last_checked']))

# Into the queue with you

- update_q.put(uuid)

+ update_q.put((priority, uuid))

# Reset for next time

watch.jitter_seconds = 0
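
Review note: the per-proxy throttle added in the hunk above can be read in isolation as the sketch below. It is a minimal illustration, not the project's code: `proxy_is_available` and its `now` parameter are hypothetical names introduced here so the timing can be exercised without sleeping, and only `proxy_last_called_time` and `reuse_time_minimum` come from the diff.

```python
import time

# Maps proxy id -> epoch second at which the proxy was last handed to a watch.
proxy_last_called_time = {}


def proxy_is_available(proxy_id, reuse_time_minimum, now=None):
    """Return True (and record the use) if the proxy may be used again.

    Hypothetical helper: `now` is injectable only to make the sketch testable.
    """
    now = time.time() if now is None else now

    # A minimum of 0 (or a missing setting) means the proxy is never throttled.
    if not reuse_time_minimum:
        return True

    elapsed = int(now - proxy_last_called_time.get(proxy_id, 0))
    if elapsed < reuse_time_minimum:
        # Not enough time has passed; the caller should skip this watch for now.
        return False

    # Record the last used time, as the ticker thread does before queueing.
    proxy_last_called_time[proxy_id] = int(now)
    return True
```

A skipped watch is not lost: it keeps failing the "recently checked" test on the next ticker pass and is retried once the proxy's minimum interval has elapsed.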

diff --git a/changedetectionio/api/api_v1.py b/changedetectionio/api/api_v1.py

index d61e93c0..a432bc67 100644

--- a/changedetectionio/api/api_v1.py

+++ b/changedetectionio/api/api_v1.py

@@ -24,7 +24,7 @@ class Watch(Resource):

abort(404, message='No watch exists with the UUID of {}'.format(uuid))

if request.args.get('recheck'):

- self.update_q.put(uuid)

+ self.update_q.put((1, uuid))

return "OK", 200

# Return without history, get that via another API call

@@ -100,7 +100,7 @@ class CreateWatch(Resource):

extras = {'title': json_data['title'].strip()} if json_data.get('title') else {}

new_uuid = self.datastore.add_watch(url=json_data['url'].strip(), tag=tag, extras=extras)

- self.update_q.put(new_uuid)

+ self.update_q.put((1, new_uuid))

return {'uuid': new_uuid}, 201

# Return concise list of available watches and some very basic info

@@ -113,12 +113,12 @@ class CreateWatch(Resource):

list[k] = {'url': v['url'],

'title': v['title'],

'last_checked': v['last_checked'],

- 'last_changed': v['last_changed'],

+ 'last_changed': v.last_changed,

'last_error': v['last_error']}

if request.args.get('recheck_all'):

for uuid in self.datastore.data['watching'].keys():

- self.update_q.put(uuid)

+ self.update_q.put((1, uuid))

return {'status': "OK"}, 200

return list, 200
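
Review note: every `update_q.put(uuid)` in this change becomes a `(priority, uuid)` tuple. The sketch below, which assumes `update_q` is a standard-library `queue.PriorityQueue` (the ticker comment refers to a "sorted" PriorityQueue), shows why priority 1 jumps ahead of scheduled rechecks that use epoch time as their priority. The watch names are made up for illustration.

```python
import queue
import time

# Hypothetical stand-in for the application's update queue.
update_q = queue.PriorityQueue()

# Scheduled rechecks use the current epoch time as their priority.
base = int(time.time())
update_q.put((base, "scheduled-watch-a"))
update_q.put((base + 1, "scheduled-watch-b"))

# A manual or API-triggered recheck uses priority 1, which sorts ahead of
# any realistic epoch timestamp.
update_q.put((1, "manual-recheck"))

# Because items are tuples, "is this UUID already queued?" has to look at the
# second element of each entry rather than at the entry itself.
queued_uuids = [q_uuid for _priority, q_uuid in update_q.queue]

# Drain the queue to observe the order in which workers would receive items.
drained = []
while not update_q.empty():
    _priority, uuid = update_q.get()
    drained.append(uuid)

print(drained)
```

Any consumer that previously read a bare UUID from the queue needs to unpack the tuple as well.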

diff --git a/changedetectionio/changedetection.py b/changedetectionio/changedetection.py

index 2adf5ffc..461476e1 100755

--- a/changedetectionio/changedetection.py

+++ b/changedetectionio/changedetection.py

@@ -4,6 +4,7 @@

import getopt

import os

+import signal

import sys

import eventlet

@@ -11,7 +12,21 @@ import eventlet.wsgi

from . import store, changedetection_app, content_fetcher

from . import __version__

+# Only global so we can access it in the signal handler

+datastore = None

+app = None

+

+def sigterm_handler(_signo, _stack_frame):

+ global app

+ global datastore

+# app.config.exit.set()

+ datastore.sync_to_json()

+ print('Shutdown: Got SIGTERM, DB saved to disk')

+# raise SystemExit

+

def main():

+ global datastore

+ global app

ssl_mode = False

host = ''

port = os.environ.get('PORT') or 5000

@@ -35,11 +50,6 @@ def main():

create_datastore_dir = False

for opt, arg in opts:

- # if opt == '--clear-all-history':

- # Remove history, the actual files you need to delete manually.

- # for uuid, watch in datastore.data['watching'].items():

- # watch.update({'history': {}, 'last_checked': 0, 'last_changed': 0, 'previous_md5': None})

-

if opt == '-s':

ssl_mode = True

@@ -72,9 +82,12 @@ def main():

"Or use the -C parameter to create the directory.".format(app_config['datastore_path']), file=sys.stderr)

sys.exit(2)

+

datastore = store.ChangeDetectionStore(datastore_path=app_config['datastore_path'], version_tag=__version__)

app = changedetection_app(app_config, datastore)

+ signal.signal(signal.SIGTERM, sigterm_handler)

+

# Go into cleanup mode

if do_cleanup:

datastore.remove_unused_snapshots()

@@ -89,6 +102,14 @@ def main():

has_password=datastore.data['settings']['application']['password'] != False

)

+ # Monitored websites will not receive a Referer header

+ # when a user clicks on an outgoing link.

+ @app.after_request

+ def hide_referrer(response):

+ if os.getenv("HIDE_REFERER", False):

+ response.headers["Referrer-Policy"] = "no-referrer"

+ return response

+

# Proxy sub-directory support

# Set environment var USE_X_SETTINGS=1 on this script

# And then in your proxy_pass settings

@@ -111,4 +132,3 @@ def main():

else:

eventlet.wsgi.server(eventlet.listen((host, int(port))), app)

-
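
Review note: the `hide_referrer` hook above relies on `os.getenv("HIDE_REFERER", False)`, so any non-empty value enables it, including `"0"` or `"false"`. The sketch below mirrors that logic on a plain dict instead of a Flask response so it can run without Flask; the function signature and the `environ` parameter are assumptions made for illustration.

```python
import os


def hide_referrer(headers, environ=None):
    """Add a Referrer-Policy header when HIDE_REFERER is set to any non-empty value.

    Hypothetical, framework-free version of the after_request hook: `headers`
    is a plain dict and `environ` defaults to the process environment.
    """
    environ = os.environ if environ is None else environ

    # The env var is spelled HIDE_REFERER (single "r", like the HTTP request
    # header), while the response header is spelled Referrer-Policy.
    if environ.get("HIDE_REFERER"):
        headers["Referrer-Policy"] = "no-referrer"
    return headers
```

If the intent is for `HIDE_REFERER=0` to disable the header, the check would need to parse the value explicitly instead of testing truthiness.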

diff --git a/changedetectionio/content_fetcher.py b/changedetectionio/content_fetcher.py

index ca43edc8..416ed6df 100644

--- a/changedetectionio/content_fetcher.py

+++ b/changedetectionio/content_fetcher.py

@@ -6,38 +6,64 @@ import requests

import time

import sys

+

+class Non200ErrorCodeReceived(Exception):

+ def __init__(self, status_code, url, screenshot=None, xpath_data=None, page_html=None):

+ # Set this so we can use it in other parts of the app

+ self.status_code = status_code

+ self.url = url

+ self.screenshot = screenshot

+ self.xpath_data = xpath_data

+ self.page_text = None

+

+ if page_html:

+ from changedetectionio import html_tools

+ self.page_text = html_tools.html_to_text(page_html)

+ return

+

+

+class JSActionExceptions(Exception):

+ def __init__(self, status_code, url, screenshot, message=''):

+ self.status_code = status_code

+ self.url = url

+ self.screenshot = screenshot

+ self.message = message

+ return

+

class PageUnloadable(Exception):

- def __init__(self, status_code, url):

+ def __init__(self, status_code, url, screenshot=False, message=False):

# Set this so we can use it in other parts of the app

self.status_code = status_code

self.url = url

+ self.screenshot = screenshot

+ self.message = message

return

- pass

class EmptyReply(Exception):

- def __init__(self, status_code, url):

+ def __init__(self, status_code, url, screenshot=None):

# Set this so we can use it in other parts of the app

self.status_code = status_code

self.url = url

+ self.screenshot = screenshot

return

- pass

class ScreenshotUnavailable(Exception):

- def __init__(self, status_code, url):

+ def __init__(self, status_code, url, page_html=None):

# Set this so we can use it in other parts of the app

self.status_code = status_code

self.url = url

+ if page_html:

+ from changedetectionio.html_tools import html_to_text

+ self.page_text = html_to_text(page_html)

return

- pass

class ReplyWithContentButNoText(Exception):

- def __init__(self, status_code, url):

+ def __init__(self, status_code, url, screenshot=None):

# Set this so we can use it in other parts of the app

self.status_code = status_code

self.url = url

+ self.screenshot = screenshot

return

- pass

-

class Fetcher():

error = None

@@ -63,12 +89,12 @@ class Fetcher():

break;

}

if('' !==r.id) {

- chained_css.unshift("#"+r.id);

- final_selector= chained_css.join('>');

+ chained_css.unshift("#"+CSS.escape(r.id));

+ final_selector= chained_css.join(' > ');

// Be sure there's only one, some sites have multiples of the same ID tag :-(

if (window.document.querySelectorAll(final_selector).length ==1 ) {

return final_selector;

- }

+ }

return null;

} else {

chained_css.unshift(r.tagName.toLowerCase());

@@ -180,7 +206,7 @@ class Fetcher():

system_https_proxy = os.getenv('HTTPS_PROXY')

# Time on top of the system defined env minimum time

- render_extract_delay=0

+ render_extract_delay = 0

@abstractmethod

def get_error(self):

@@ -267,7 +293,15 @@ class base_html_playwright(Fetcher):

# allow per-watch proxy selection override

if proxy_override:

- self.proxy = {'server': proxy_override}

+ # https://playwright.dev/docs/network#http-proxy

+ from urllib.parse import urlparse

+ parsed = urlparse(proxy_override)

+ proxy_url = "{}://{}:{}".format(parsed.scheme, parsed.hostname, parsed.port)

+ self.proxy = {'server': proxy_url}

+ if parsed.username:

+ self.proxy['username'] = parsed.username

+ if parsed.password:

+ self.proxy['password'] = parsed.password

def run(self,

url,

@@ -282,6 +316,7 @@ class base_html_playwright(Fetcher):

import playwright._impl._api_types

from playwright._impl._api_types import Error, TimeoutError

response = None

+

with sync_playwright() as p:

browser_type = getattr(p, self.browser_type)

@@ -319,40 +354,63 @@ class base_html_playwright(Fetcher):

with page.expect_navigation():

response = page.goto(url, wait_until='load')

- if self.webdriver_js_execute_code is not None:

- page.evaluate(self.webdriver_js_execute_code)

except playwright._impl._api_types.TimeoutError as e:

context.close()

browser.close()

# This can be ok, we will try to grab what we could retrieve

pass

+

except Exception as e:

- print ("other exception when page.goto")

- print (str(e))

+ print("other exception when page.goto")

+ print(str(e))

context.close()

browser.close()

- raise PageUnloadable(url=url, status_code=None)

+ raise PageUnloadable(url=url, status_code=None, message=str(e))

if response is None:

context.close()

browser.close()

- print ("response object was none")

+ print("response object was none")

raise EmptyReply(url=url, status_code=None)

- # Bug 2(?) Set the viewport size AFTER loading the page

- page.set_viewport_size({"width": 1280, "height": 1024})

+

+ # Removed browser-set-size, seemed to be needed to make screenshots work reliably in older playwright versions

+ # Was causing exceptions like 'waiting for page but content is changing' etc

+ # https://www.browserstack.com/docs/automate/playwright/change-browser-window-size 1280x720 should be the default

+

extra_wait = int(os.getenv("WEBDRIVER_DELAY_BEFORE_CONTENT_READY", 5)) + self.render_extract_delay

time.sleep(extra_wait)

+

+ if self.webdriver_js_execute_code is not None:

+ try:

+ page.evaluate(self.webdriver_js_execute_code)

+ except Exception as e:

+ # Is it possible to get a screenshot?

+ error_screenshot = False

+ try:

+ page.screenshot(type='jpeg',

+ clip={'x': 1.0, 'y': 1.0, 'width': 1280, 'height': 1024},

+ quality=1)

+

+ # The actual screenshot

+ error_screenshot = page.screenshot(type='jpeg',

+ full_page=True,

+ quality=int(os.getenv("PLAYWRIGHT_SCREENSHOT_QUALITY", 72)))

+ except Exception as s:

+ pass

+

+ raise JSActionExceptions(status_code=response.status, screenshot=error_screenshot, message=str(e), url=url)

+

+ else:

+ # JS eval was run, now we also wait some time if possible to let the page settle

+ if self.render_extract_delay:

+ page.wait_for_timeout(self.render_extract_delay * 1000)

+

+ page.wait_for_timeout(500)

+

self.content = page.content()

self.status_code = response.status

-

- if len(self.content.strip()) == 0:

- context.close()

- browser.close()

- print ("Content was empty")

- raise EmptyReply(url=url, status_code=None)

-

self.headers = response.all_headers()

if current_css_filter is not None:

@@ -379,9 +437,17 @@ class base_html_playwright(Fetcher):

browser.close()

raise ScreenshotUnavailable(url=url, status_code=None)

+ if len(self.content.strip()) == 0:

+ context.close()

+ browser.close()

+ print("Content was empty")

+ raise EmptyReply(url=url, status_code=None, screenshot=self.screenshot)

+

context.close()

browser.close()

+ if not ignore_status_codes and self.status_code != 200:

+ raise Non200ErrorCodeReceived(url=url, status_code=self.status_code, page_html=self.content, screenshot=self.screenshot)

class base_html_webdriver(Fetcher):

if os.getenv("WEBDRIVER_URL"):

@@ -459,8 +525,6 @@ class base_html_webdriver(Fetcher):

# Selenium doesn't automatically wait for actions as good as Playwright, so wait again

self.driver.implicitly_wait(int(os.getenv("WEBDRIVER_DELAY_BEFORE_CONTENT_READY", 5)))

- self.screenshot = self.driver.get_screenshot_as_png()

-

# @todo - how to check this? is it possible?

self.status_code = 200

# @todo somehow we should try to get this working for WebDriver

@@ -471,6 +535,8 @@ class base_html_webdriver(Fetcher):

self.content = self.driver.page_source

self.headers = {}

+ self.screenshot = self.driver.get_screenshot_as_png()

+

# Does the connection to the webdriver work? run a test connection.

def is_ready(self):

from selenium import webdriver

@@ -509,7 +575,12 @@ class html_requests(Fetcher):

ignore_status_codes=False,

current_css_filter=None):

- proxies={}

+ # Make requests use a more modern looking user-agent

+ if 'User-Agent' not in request_headers:

+ request_headers['User-Agent'] = os.getenv("DEFAULT_SETTINGS_HEADERS_USERAGENT",

+ 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.66 Safari/537.36')

+

+ proxies = {}

# Allows override the proxy on a per-request basis

if self.proxy_override:

@@ -537,10 +608,14 @@ class html_requests(Fetcher):

if encoding:

r.encoding = encoding

+ if not r.content or not len(r.content):

+ raise EmptyReply(url=url, status_code=r.status_code)

+

# @todo test this

# @todo maybe you really want to test zero-byte return pages?

- if (not ignore_status_codes and not r) or not r.content or not len(r.content):

- raise EmptyReply(url=url, status_code=r.status_code)

+ if r.status_code != 200 and not ignore_status_codes:

+ # maybe check with content works?

+ raise Non200ErrorCodeReceived(url=url, status_code=r.status_code, page_html=r.text)

self.status_code = r.status_code

self.content = r.text
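
Review note: the Playwright proxy change splits credentials out of the proxy URL because Playwright takes `server`, `username` and `password` as separate keys. The sketch below isolates that step. `playwright_proxy_settings` is a hypothetical helper name, and unlike the diff it omits the port when the URL has none, since formatting a missing port directly would produce a server string ending in `:None`.

```python
from urllib.parse import urlparse


def playwright_proxy_settings(proxy_override):
    """Split a proxy URL into the dict shape Playwright expects.

    Hypothetical helper for illustration; only the urlparse-based approach
    and the server/username/password keys come from the change above.
    """
    parsed = urlparse(proxy_override)

    # Rebuild the server URL without credentials.
    server = "{}://{}".format(parsed.scheme, parsed.hostname)
    if parsed.port:
        server += ":{}".format(parsed.port)

    proxy = {"server": server}
    if parsed.username:
        proxy["username"] = parsed.username
    if parsed.password:
        proxy["password"] = parsed.password
    return proxy


print(playwright_proxy_settings("http://user:secret@proxy.example.com:3128"))
```

The returned dict is what would be assigned to `self.proxy` in the fetcher.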

diff --git a/changedetectionio/fetch_site_status.py b/changedetectionio/fetch_site_status.py

index 1b1a4827..c577c563 100644

--- a/changedetectionio/fetch_site_status.py

+++ b/changedetectionio/fetch_site_status.py

@@ -12,48 +12,37 @@ urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)

# Some common stuff here that can be moved to a base class

+# (set_proxy_from_list)

class perform_site_check():

+ screenshot = None

+ xpath_data = None

def __init__(self, *args, datastore, **kwargs):

super().__init__(*args, **kwargs)

self.datastore = datastore

- # If there was a proxy list enabled, figure out what proxy_args/which proxy to use

- # if watch.proxy use that

- # fetcher.proxy_override = watch.proxy or main config proxy

- # Allows override the proxy on a per-request basis

- # ALWAYS use the first one is nothing selected

+ # Doesn't look like python supports forward slash auto enclosure in re.findall

+ # So convert it to inline flag "(?i)foobar" type configuration (Python 3.11+ only accepts global inline flags at the start)

+ def forward_slash_enclosed_regex_to_options(self, regex):

+ res = re.search(r'^/(.*?)/(\w+)$', regex, re.IGNORECASE)

- def set_proxy_from_list(self, watch):

- proxy_args = None

- if self.datastore.proxy_list is None:

- return None

-

- # If its a valid one

- if any([watch['proxy'] in p for p in self.datastore.proxy_list]):

- proxy_args = watch['proxy']

-

- # not valid (including None), try the system one

+ if res:

+ regex = '(?{})'.format(res.group(2)) + res.group(1)

else:

- system_proxy = self.datastore.data['settings']['requests']['proxy']

- # Is not None and exists

- if any([system_proxy in p for p in self.datastore.proxy_list]):

- proxy_args = system_proxy

+ regex = '(?i)' + regex

- # Fallback - Did not resolve anything, use the first available

- if proxy_args is None:

- proxy_args = self.datastore.proxy_list[0][0]

+ return regex

- return proxy_args

def run(self, uuid):

- timestamp = int(time.time()) # used for storage etc too

-

changed_detected = False

screenshot = False # as bytes

stripped_text_from_html = ""

- watch = self.datastore.data['watching'][uuid]

+ watch = self.datastore.data['watching'].get(uuid)

+ if not watch:

+ return

# Protect against file:// access

if re.search(r'^file', watch['url'], re.IGNORECASE) and not os.getenv('ALLOW_FILE_URI', False):

@@ -64,7 +53,7 @@ class perform_site_check():

# Unset any existing notification error

update_obj = {'last_notification_error': False, 'last_error': False}

- extra_headers = self.datastore.get_val(uuid, 'headers')

+ extra_headers = self.datastore.data['watching'][uuid].get('headers')

# Tweak the base config with the per-watch ones

request_headers = self.datastore.data['settings']['headers'].copy()

@@ -88,11 +77,11 @@ class perform_site_check():

if 'Accept-Encoding' in request_headers and "br" in request_headers['Accept-Encoding']:

request_headers['Accept-Encoding'] = request_headers['Accept-Encoding'].replace(', br', '')

- timeout = self.datastore.data['settings']['requests']['timeout']

- url = self.datastore.get_val(uuid, 'url')

- request_body = self.datastore.get_val(uuid, 'body')

- request_method = self.datastore.get_val(uuid, 'method')

- ignore_status_code = self.datastore.get_val(uuid, 'ignore_status_codes')

+ timeout = self.datastore.data['settings']['requests'].get('timeout')

+ url = watch.get('url')

+ request_body = self.datastore.data['watching'][uuid].get('body')

+ request_method = self.datastore.data['watching'][uuid].get('method')

+ ignore_status_codes = self.datastore.data['watching'][uuid].get('ignore_status_codes', False)

# source: support

is_source = False

@@ -108,9 +97,13 @@ class perform_site_check():

# If the klass doesnt exist, just use a default

klass = getattr(content_fetcher, "html_requests")

+ proxy_id = self.datastore.get_preferred_proxy_for_watch(uuid=uuid)

+ proxy_url = None

+ if proxy_id:

+ proxy_url = self.datastore.proxy_list.get(proxy_id).get('url')

+ print ("UUID {} Using proxy {}".format(uuid, proxy_url))

- proxy_args = self.set_proxy_from_list(watch)

- fetcher = klass(proxy_override=proxy_args)

+ fetcher = klass(proxy_override=proxy_url)

# Configurable per-watch or global extra delay before extracting text (for webDriver types)

system_webdriver_delay = self.datastore.data['settings']['application'].get('webdriver_delay', None)

@@ -122,9 +115,12 @@ class perform_site_check():

if watch['webdriver_js_execute_code'] is not None and watch['webdriver_js_execute_code'].strip():

fetcher.webdriver_js_execute_code = watch['webdriver_js_execute_code']

- fetcher.run(url, timeout, request_headers, request_body, request_method, ignore_status_code, watch['css_filter'])

+ fetcher.run(url, timeout, request_headers, request_body, request_method, ignore_status_codes, watch['css_filter'])

fetcher.quit()

+ self.screenshot = fetcher.screenshot

+ self.xpath_data = fetcher.xpath_data

+

# Fetching complete, now filters

# @todo move to class / maybe inside of fetcher abstract base?

@@ -158,12 +154,15 @@ class perform_site_check():

has_filter_rule = True

if has_filter_rule:

- if 'json:' in css_filter_rule:

- stripped_text_from_html = html_tools.extract_json_as_string(content=fetcher.content, jsonpath_filter=css_filter_rule)

+ json_filter_prefixes = ['json:', 'jq:']

+ if any(prefix in css_filter_rule for prefix in json_filter_prefixes):

+ stripped_text_from_html = html_tools.extract_json_as_string(content=fetcher.content, json_filter=css_filter_rule)

is_html = False

if is_html or is_source:

+

# CSS Filter, extract the HTML that matches and feed that into the existing inscriptis::get_text

+ fetcher.content = html_tools.workarounds_for_obfuscations(fetcher.content)

html_content = fetcher.content

# If not JSON, and if it's not text/plain..

@@ -206,7 +205,7 @@ class perform_site_check():

# Treat pages with no renderable text content as a change? No by default

empty_pages_are_a_change = self.datastore.data['settings']['application'].get('empty_pages_are_a_change', False)

if not is_json and not empty_pages_are_a_change and len(stripped_text_from_html.strip()) == 0:

- raise content_fetcher.ReplyWithContentButNoText(url=url, status_code=200)

+ raise content_fetcher.ReplyWithContentButNoText(url=url, status_code=fetcher.get_last_status_code(), screenshot=screenshot)

# We rely on the actual text in the html output.. many sites have random script vars etc,

# in the future we'll implement other mechanisms.

@@ -226,15 +225,27 @@ class perform_site_check():

if len(extract_text) > 0:

regex_matched_output = []

for s_re in extract_text:

- result = re.findall(s_re.encode('utf8'), stripped_text_from_html,

- flags=re.MULTILINE | re.DOTALL | re.LOCALE)

- if result:

- regex_matched_output = regex_matched_output + result

+ # incase they specified something in '/.../x'

+ regex = self.forward_slash_enclosed_regex_to_options(s_re)

+ result = re.findall(regex.encode('utf-8'), stripped_text_from_html)

+

+ for l in result:

+ if type(l) is tuple:

+ #@todo - some formatter option default (between groups)

+ regex_matched_output += list(l) + [b'\n']

+ else:

+ # @todo - some formatter option default (between each ungrouped result)

+ regex_matched_output += [l] + [b'\n']

+ # Now we will only show what the regex matched

+ stripped_text_from_html = b''

+ text_content_before_ignored_filter = b''

if regex_matched_output:

- stripped_text_from_html = b'\n'.join(regex_matched_output)

+ # @todo some formatter for presentation?

+ stripped_text_from_html = b''.join(regex_matched_output)

text_content_before_ignored_filter = stripped_text_from_html

+

# Re #133 - if we should strip whitespaces from triggering the change detected comparison

if self.datastore.data['settings']['application'].get('ignore_whitespace', False):

fetched_md5 = hashlib.md5(stripped_text_from_html.translate(None, b'\r\n\t ')).hexdigest()

@@ -296,4 +307,4 @@ class perform_site_check():

if not watch.get('previous_md5'):

watch['previous_md5'] = fetched_md5

- return changed_detected, update_obj, text_content_before_ignored_filter, fetcher.screenshot, fetcher.xpath_data

+ return changed_detected, update_obj, text_content_before_ignored_filter
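
The `extract_text` changes in the diff above accept patterns written as `/.../flags` and flatten the `re.findall` results, with one newline after each match. Below is a minimal, standalone sketch of that behaviour, not the project's implementation. The function names `slash_regex_to_inline_flags` and `extract_matches` are hypothetical, and the rule used to detect the enclosing slashes is an assumption, since the diff does not show how `res` is produced. One deliberate difference: the diff appends the `(?flags)` group to the pattern, while this sketch puts it first, because Python 3.11 and later reject global inline flags anywhere but the start of an expression.

```python
import re


def slash_regex_to_inline_flags(pattern: str) -> str:
    """Convert '/body/flags' into '(?flags)body'; otherwise default to '(?i)'."""
    # Assumed detection rule: the whole string has the shape /body/flags.
    res = re.fullmatch(r'/(.*)/(\w+)', pattern, re.DOTALL)
    if res:
        return '(?{}){}'.format(res.group(2), res.group(1))
    # No enclosing slashes: fall back to case-insensitive matching, as in the diff.
    return '(?i)' + pattern


def extract_matches(patterns, text: bytes) -> bytes:
    """Return only what the patterns matched, one match per line."""
    matched = []
    for raw in patterns:
        regex = slash_regex_to_inline_flags(raw)
        for hit in re.findall(regex.encode('utf-8'), text):
            if isinstance(hit, tuple):
                # Several capture groups: emit the groups back to back.
                matched += list(hit) + [b'\n']
            else:
                matched += [hit, b'\n']
    return b''.join(matched)


if __name__ == '__main__':
    page = b'10 APPLES\n5 pears'
    print(extract_matches([r'/(\d+) (apples)/i'], page))  # b'10APPLES\n'
```

As in the diff, capture groups are joined with no separator between them, which is why the diff carries a `@todo` about a formatter option.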

diff --git a/changedetectionio/forms.py b/changedetectionio/forms.py

index 96ac0e68..627c8561 100644

--- a/changedetectionio/forms.py

+++ b/changedetectionio/forms.py

@@ -303,22 +303,44 @@ class ValidateCSSJSONXPATHInput(object):

# Re #265 - maybe in the future fetch the page and offer a

# warning/notice that its possible the rule doesnt yet match anything?

+ if not self.allow_json:

+ raise ValidationError("jq not permitted in this field!")

+

+ if 'jq:' in line:

+ try:

+ import jq

+ except ModuleNotFoundError:

+ # `jq` requires full compilation in windows and so isn't generally available

+ raise ValidationError("jq not support not found")

+

+ input = line.replace('jq:', '')

+

+ try:

+ jq.compile(input)

+ except (ValueError) as e:

+ message = field.gettext('\'%s\' is not a valid jq expression. (%s)')

+ raise ValidationError(message % (input, str(e)))

+ except:

+ raise ValidationError("A system-error occurred when validating your jq expression")
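
The validation flow added above for `jq:` filters can be read as four steps: skip lines without the prefix, reject the prefix where JSON filters are not allowed, check that the optional `jq` module can be imported, and then compile the expression. Below is a minimal sketch of that flow under some assumptions. The function name `validate_filter_line` is hypothetical, `ValueError` stands in for the form library's `ValidationError` so that the block is self-contained, and the order of the first two checks is a guess, because the diff's indentation did not survive.

```python
def validate_filter_line(line: str, allow_json: bool = True) -> None:
    """Raise ValueError if `line` is a jq: rule that cannot be used."""
    if 'jq:' not in line:
        return  # Not a jq rule, nothing to validate here.

    if not allow_json:
        raise ValueError("jq not permitted in this field!")

    try:
        # Optional dependency; the diff notes it is often unavailable on Windows.
        import jq
    except ModuleNotFoundError:
        raise ValueError("jq support not found") from None

    expression = line.replace('jq:', '')
    try:
        jq.compile(expression)
    except ValueError as e:
        raise ValueError(
            "'{}' is not a valid jq expression. ({})".format(expression, e)
        ) from e


if __name__ == '__main__':
    validate_filter_line('div.price')  # Passes: not a jq rule.
    try:
        validate_filter_line('jq:.price', allow_json=False)
    except ValueError as err:
        print(err)
```

Importing `jq` inside the function keeps the rest of the form usable on systems where the module is not installed.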

class quickWatchForm(Form):

url = fields.URLField('URL', validators=[validateURL()])

tag = StringField('Group tag', [validators.Optional()])

+ watch_submit_button = SubmitField('Watch', render_kw={"class": "pure-button pure-button-primary"})

+ edit_and_watch_submit_button = SubmitField('Edit > Watch', render_kw={"class": "pure-button pure-button-primary"})

+

# Common to a single watch and the global settings

class commonSettingsForm(Form):

-

- notification_urls = StringListField('Notification URL list', validators=[validators.Optional(), ValidateNotificationBodyAndTitleWhenURLisSet(), ValidateAppRiseServers()])

- notification_title = StringField('Notification title', default=default_notification_title, validators=[validators.Optional(), ValidateTokensList()])

- notification_body = TextAreaField('Notification body', default=default_notification_body, validators=[validators.Optional(), ValidateTokensList()])

- notification_format = SelectField('Notification format', choices=valid_notification_formats.keys(), default=default_notification_format)

+ notification_urls = StringListField('Notification URL list', validators=[validators.Optional(), ValidateAppRiseServers()])

+ notification_title = StringField('Notification title', validators=[validators.Optional(), ValidateTokensList()])

+ notification_body = TextAreaField('Notification body', validators=[validators.Optional(), ValidateTokensList()])

+ notification_format = SelectField('Notification format', choices=valid_notification_formats.keys())

fetch_backend = RadioField(u'Fetch method', choices=content_fetcher.available_fetchers(), validators=[ValidateContentFetcherIsReady()])

extract_title_as_title = BooleanField('Extract

+## Screenshots

Please :star: star :star: this project and help it grow! https://github.com/dgtlmoon/changedetection.io/

@@ -117,8 +121,8 @@ See the wiki for more information https://github.com/dgtlmoon/changedetection.io